This bug is fixed in Java 23u10

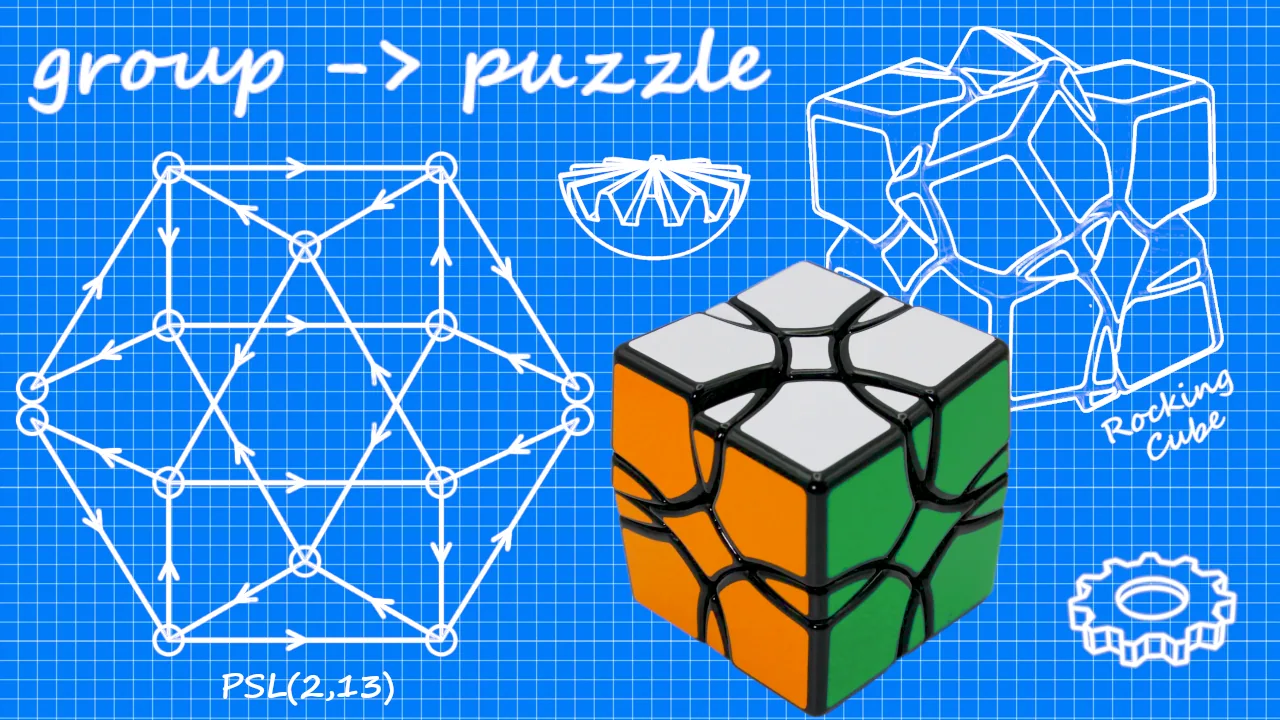

I came across an apparent bug in java.util.zip.ZipInputStream where reading a certain type of zip file causes an exception:

    java.util.zip.ZipException: invalid entry size (expected 0 but got 199 bytes)
        at java.util.zip.ZipInputStream.readEnd(ZipInputStream.java:384)
        at java.util.zip.ZipInputStream.read(ZipInputStream.java:196)
        at java.util.zip.InflaterInputStream.read(InflaterInputStream.java:122)

The file in question reads fine using archiving utilities and Java’s ZipFile, so the issue is likely rooted in ZipInputStream. My application requires reading the zip file sequentially, so ZipInputStream is my only option besides a third-party library. I decided to search for a solution using vanilla Java rather than pulling in a new dependency.

Sample ZIP

A sample file that triggers the bug can be found on GitHub.

The problem

Let’s take a closer look at what causes the exception:

    import java.io.BufferedInputStream;
    import java.io.InputStream;
    import java.util.zip.ZipEntry;
    import java.util.zip.ZipInputStream;

    @Test
    public void readProblematicZip() throws Exception {
        try (InputStream fileStream = getClass().getResourceAsStream("/Content.zip");
             InputStream bufferedStream = new BufferedInputStream(fileStream);
             ZipInputStream zipStream = new ZipInputStream(bufferedStream)) {

            // Sizes come from the local file header at this point
            ZipEntry entry = zipStream.getNextEntry();
            System.out.println("Entry name: " + entry.getName());
            System.out.println("Entry compressed size: " + entry.getCompressedSize());
            System.out.println("Entry uncompressed size: " + entry.getSize());

            // Consume all bytes
            StringBuilder content = new StringBuilder();
            try {
                for (int b; (b = zipStream.read()) >= 0;) content.append((char) b);
            } catch (Exception e) {
                System.err.println("Stream failed at byte " + content.length());
                e.printStackTrace();
            }
        }
    }

Running this test produces the following output:

    Entry name: Content.txt
    Entry compressed size: -1
    Entry uncompressed size: -1
    Stream failed at byte 199
    java.util.zip.ZipException: invalid entry size (expected 0 but got 199 bytes)

The ZipEntry derived from the local file header reads OK, but indicates unknown file sizes, which means the actual sizes will follow the compressed data in a separate descriptor. The code reports that the stream fails at byte 199, which is exactly the length of Content.txt, so the exception is thrown after the content has been fully consumed.
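To illustrate the "sizes follow the data" behaviour in the normal, working case, here is a minimal sketch that writes an ordinary zip in memory and reads it back. It relies on the assumption that ZipOutputStream emits a trailing data descriptor when an entry's size is not declared up front; the class and method names are invented for this example, and the archive it produces is a plain 32-bit zip, not the problematic ZIP64 variant.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

// Hypothetical helper class, for illustration only.
class StreamedSizeSketch {

    /** Writes one deflated entry without declaring its size up front. */
    static byte[] zipOf(byte[] content) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (ZipOutputStream zos = new ZipOutputStream(out)) {
            zos.putNextEntry(new ZipEntry("Content.txt"));
            zos.write(content);
            zos.closeEntry();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    /** Returns { entry size before reading, entry size after reading to EOF }. */
    static long[] sizesBeforeAndAfter(byte[] zip) {
        try (ZipInputStream zis = new ZipInputStream(new ByteArrayInputStream(zip))) {
            ZipEntry entry = zis.getNextEntry();
            long before = entry.getSize();          // unknown until the descriptor is read
            byte[] buf = new byte[4096];
            while (zis.read(buf) != -1) {
                // discard the content; only the sizes matter here
            }
            return new long[] { before, entry.getSize() };
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        long[] sizes = sizesBeforeAndAfter(zipOf(new byte[199]));
        System.out.println("Before reading: " + sizes[0]);
        System.out.println("After reading:  " + sizes[1]);
    }
}
```

With a well-formed 32-bit descriptor the size goes from -1 to the real length once the entry has been consumed, which is what should also have happened with the sample file.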

Let’s see where the exception is generated in ZipInputStream:

    /*
     * Reads end of deflated entry as well as EXT descriptor if present.
     */
    private void readEnd(ZipEntry e) throws IOException {

        // ...... code omitted which populates ZipEntry e ......

        if (e.size != inf.getBytesWritten()) {
            throw new ZipException(
                "invalid entry size (expected " + e.size +
                " but got " + inf.getBytesWritten() + " bytes)");
        }
    }

It seems that e.size now equals zero, unlike prior to streaming, when it reported -1 for unknown. So something is causing ZipInputStream to populate the size field with zero.

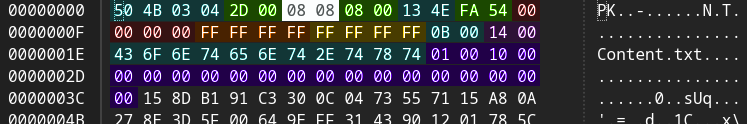

Let’s take a look at the local file header:

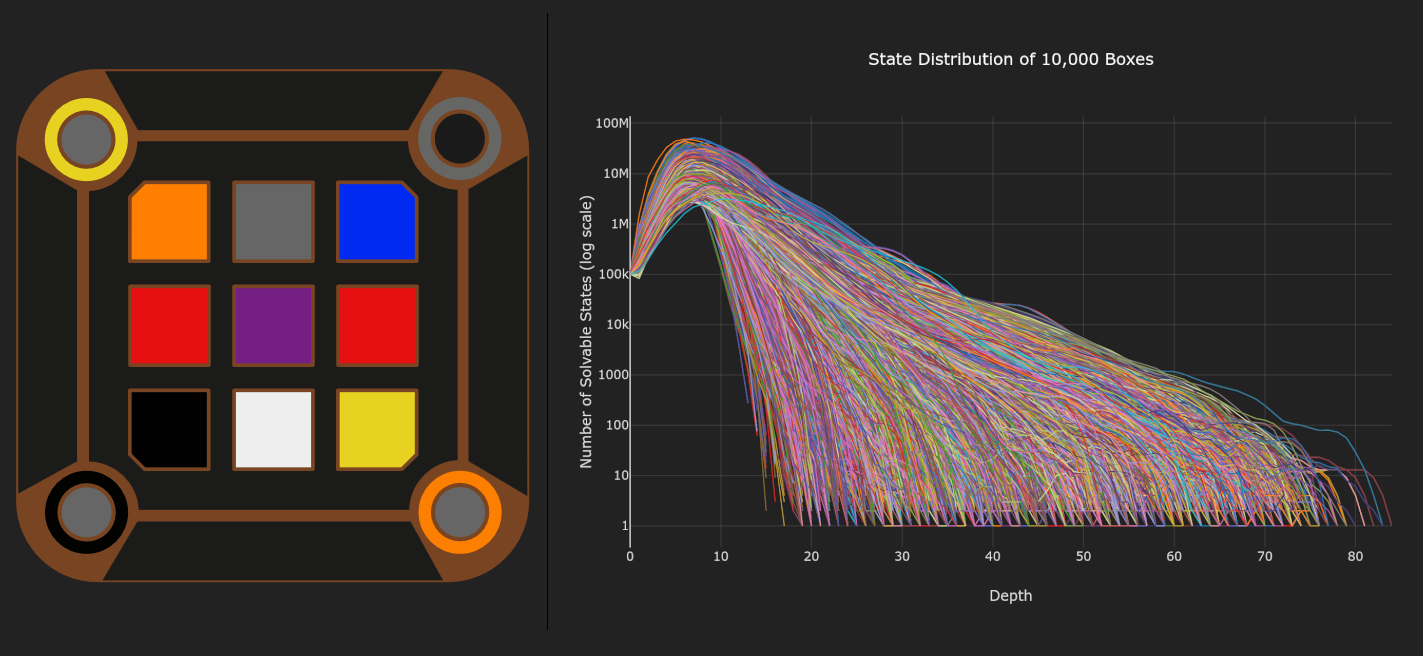

Two things stand out. First, the general purpose bit flag (highlighted white) has bit 3 set, meaning a trailing data descriptor will be present containing the actual file sizes. Second, and strangely, the csize (orange) and uncompressed size (yellow) fields are set to 0xFFFFFFFF, indicating that this is a ZIP64 record.

Ordinarily a file record will not be written in ZIP64 format by an author unless necessary (when its size exceeds 4 GiB). But whoever wrote this unusual zip seems to be writing ZIP64 records by default. According to APPNOTE.TXT, this is indeed allowed:

    4.3.9.2 When compressing files, compressed and uncompressed sizes
    SHOULD be stored in ZIP64 format (as 8 byte values) when a
    file's size exceeds 0xFFFFFFFF. However ZIP64 format MAY be
    used regardless of the size of a file.

The ZIP64 size and csize in the extra data (shown above in purple) are equal to zero, which means their actual sizes will be encoded in the EXT descriptor.
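The header layout described above can be checked programmatically. The following is a rough sketch that builds a synthetic local file header with the same shape (bit 3 set, both 32-bit size fields at 0xFFFFFFFF, a ZIP64 extra field holding zeroes) and inspects it. The bytes are constructed for illustration and are not copied from the sample archive; the version, timestamp and CRC values are placeholders, and the class and method names are made up.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper class, for illustration only.
class LocalHeaderSketch {
    static final int LOCSIG = 0x04034b50;            // local file header signature
    static final long ZIP64_MAGICVAL = 0xFFFFFFFFL;  // "look in the ZIP64 fields" marker

    /** Builds a synthetic header shaped like the one discussed in the text. */
    static byte[] syntheticHeader() {
        byte[] name = "Content.txt".getBytes(StandardCharsets.US_ASCII);
        ByteBuffer b = ByteBuffer.allocate(30 + name.length + 20)
                .order(ByteOrder.LITTLE_ENDIAN);
        b.putInt(LOCSIG);
        b.putShort((short) 45);          // version needed (placeholder)
        b.putShort((short) 0x0008);      // general purpose flags: bit 3 set
        b.putShort((short) 8);           // compression method: deflate
        b.putShort((short) 0);           // modification time (placeholder)
        b.putShort((short) 0);           // modification date (placeholder)
        b.putInt(0);                     // CRC-32, deferred to the descriptor
        b.putInt(0xFFFFFFFF);            // compressed size: ZIP64 marker
        b.putInt(0xFFFFFFFF);            // uncompressed size: ZIP64 marker
        b.putShort((short) name.length); // file name length
        b.putShort((short) 20);          // extra field length
        b.put(name);
        b.putShort((short) 0x0001);      // ZIP64 extended information extra field id
        b.putShort((short) 16);          // extra data size: two 8-byte values
        b.putLong(0L);                   // ZIP64 uncompressed size (unknown)
        b.putLong(0L);                   // ZIP64 compressed size (unknown)
        return b.array();
    }

    /** Bit 3 of the general purpose flags (offset 6) signals a trailing data descriptor. */
    static boolean hasDataDescriptor(byte[] header) {
        ByteBuffer b = ByteBuffer.wrap(header).order(ByteOrder.LITTLE_ENDIAN);
        return (b.getShort(6) & 0x0008) != 0;
    }

    /** Both 32-bit size fields (offsets 18 and 22) hold the ZIP64 marker value. */
    static boolean usesZip64Sizes(byte[] header) {
        ByteBuffer b = ByteBuffer.wrap(header).order(ByteOrder.LITTLE_ENDIAN);
        long csize = b.getInt(18) & 0xFFFFFFFFL;
        long size = b.getInt(22) & 0xFFFFFFFFL;
        return csize == ZIP64_MAGICVAL && size == ZIP64_MAGICVAL;
    }

    public static void main(String[] args) {
        byte[] header = syntheticHeader();
        System.out.println("Data descriptor follows: " + hasDataDescriptor(header));
        System.out.println("ZIP64 size markers:      " + usesZip64Sizes(header));
    }
}
```

When both checks are true, a reader has to expect a data descriptor with 8-byte size fields, whatever the entry's actual size turns out to be.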

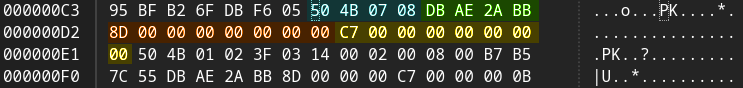

As expected (since this is a ZIP64 record), the csize (orange) and size (yellow) are written as 8-byte integers per the specification. Compressed size is equal to 0x8D or 141 bytes, and uncompressed size 0xC7 or 199 bytes. So what is going on with the Java exception?

If we add some more debugging to the test code, we can find that post-decompression, the ZipEntry sizes now contain actual values populated by the readEnd() method of ZipInputStream.

    Stream failed at byte 199
    ** New entry compressed size: 141
    ** New entry uncompressed size: 0
    java.util.zip.ZipException: invalid entry size (expected 0 but got 199 bytes)

Why is compressed size correct but uncompressed size set to zero?

    if ((flag & 8) == 8) {
        /* "Data Descriptor" present */
        if (inf.getBytesWritten() > ZIP64_MAGICVAL ||
            inf.getBytesRead() > ZIP64_MAGICVAL) {
            // ZIP64 format
            readFully(tmpbuf, 0, ZIP64_EXTHDR);
            // ...... remaining code omitted ......

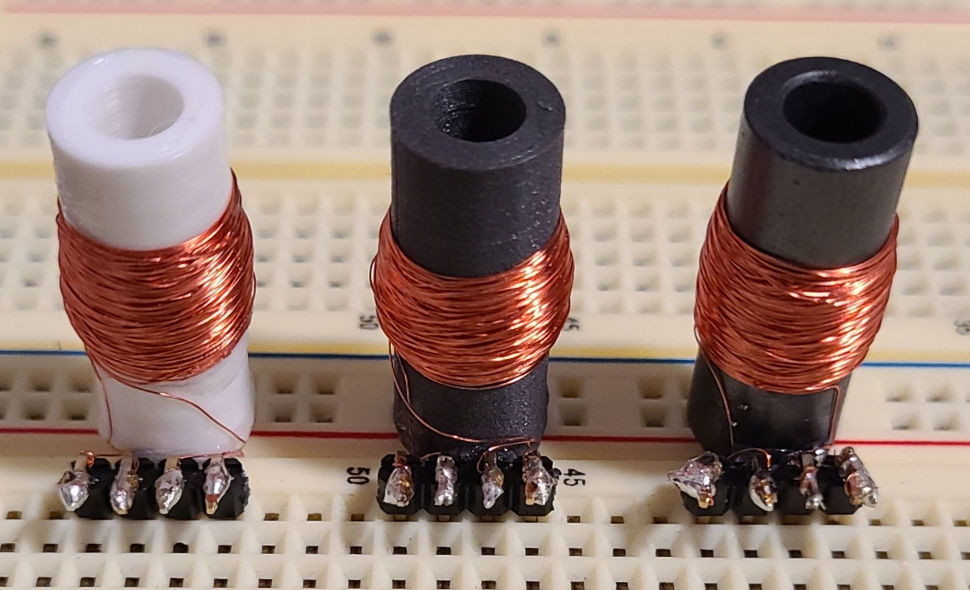

In readEnd(), we see that the condition for reading the data descriptor in ZIP64 format is whether the inflater's reported bytes read or written exceed a certain magic value (0xFFFFFFFF, or 2³² − 1). This is not true of the sample archive, so the data descriptor is instead misinterpreted as belonging to an ordinary 32-bit zip with 4-byte integers, hence the 141 and 0 values.

This causes two problems in ZipInputStream - not only does the size check fail, causing an exception, but the stream position also becomes misaligned by 8 bytes.
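Both effects can be reproduced with a few lines of byte arithmetic. The sketch below builds a ZIP64-style data descriptor using the sizes from the sample (141 and 199) and then reads it with both layouts. The CRC value is a placeholder, the class and method names are invented, and the code only mimics the field offsets; it is not the JDK's implementation.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper class, for illustration only.
class DescriptorMisreadSketch {
    static final int EXTSIG = 0x08074b50;  // data descriptor signature
    static final int EXTHDR = 16;          // 32-bit layout: sig + crc + 4-byte csize + 4-byte size
    static final int ZIP64_EXTHDR = 24;    // ZIP64 layout: sig + crc + 8-byte csize + 8-byte size

    /** Builds a ZIP64 data descriptor in little-endian byte order. */
    static byte[] zip64Descriptor(int crc, long csize, long size) {
        return ByteBuffer.allocate(ZIP64_EXTHDR).order(ByteOrder.LITTLE_ENDIAN)
                .putInt(EXTSIG).putInt(crc).putLong(csize).putLong(size).array();
    }

    /** Reads { csize, size } as 4-byte fields at offsets 8 and 12 (the wrong layout here). */
    static long[] readAs32Bit(byte[] d) {
        ByteBuffer b = ByteBuffer.wrap(d).order(ByteOrder.LITTLE_ENDIAN);
        return new long[] { b.getInt(8) & 0xFFFFFFFFL, b.getInt(12) & 0xFFFFFFFFL };
    }

    /** Reads { csize, size } as 8-byte fields at offsets 8 and 16 (the correct layout). */
    static long[] readAsZip64(byte[] d) {
        ByteBuffer b = ByteBuffer.wrap(d).order(ByteOrder.LITTLE_ENDIAN);
        return new long[] { b.getLong(8), b.getLong(16) };
    }

    public static void main(String[] args) {
        byte[] d = zip64Descriptor(0, 141, 199);   // CRC is a placeholder
        long[] wrong = readAs32Bit(d);
        long[] right = readAsZip64(d);
        System.out.println("32-bit read: csize=" + wrong[0] + ", size=" + wrong[1]);
        System.out.println("ZIP64 read:  csize=" + right[0] + ", size=" + right[1]);
        System.out.println("Bytes left unread: " + (ZIP64_EXTHDR - EXTHDR));
    }
}
```

The 32-bit read yields csize=141 and size=0, because the "size" it picks up is really the upper half of the 8-byte csize, and the last 8 bytes of the descriptor are left in the stream.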

A solution using reflection

It is possible to recover from the exception by manually advancing the stream and tweaking the internal state of the ZipInputStream.

This code uses reflection to grab the pushback stream that backs ZipInputStream, then reads 8 bytes to advance it to the position it would have reached had the bug not occurred:

    // Grab pushback stream
    Field inF = FilterInputStream.class.getDeclaredField("in");
    inF.setAccessible(true);
    PushbackInputStream in = (PushbackInputStream) inF.get(zipStream);

    // Read 8 extra bytes to compensate for the longer ZIP64 descriptor
    for (int i = 0; i < 8; i++) in.read();

Now to ensure the stream is ready to read a new entry, we must modify some internal variables:

    // Close the entry manually
    Field f = ZipInputStream.class.getDeclaredField("entryEOF");
    f.setAccessible(true);
    f.set(zipStream, true);
    f = ZipInputStream.class.getDeclaredField("entry");
    f.setAccessible(true);
    f.set(zipStream, null);

The only remaining task is to package the fix into a convenient wrapper class that automatically recovers from the exception. This raises a question: how do we know when to apply the fix and when not to?

Luckily, this bug presents itself in exactly the same way whenever a ZIP64 record with file sizes below 2³² bytes is encountered. Any compressed size below 2³², stored as an 8-byte little-endian integer, ends with four zero bytes, and those are the bytes that get misread as the 4-byte uncompressed size. The expected size reported in the exception will therefore always be zero when the bug is triggered. So reliably detecting this particular error condition is as simple as checking the ZipException text for the following prefix:

    e.getMessage().startsWith("invalid entry size (expected 0 ")
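As a small sketch, the prefix check could be wrapped in a null-safe helper. The class and method names here are hypothetical and are not taken from the published patch; the check also assumes the JDK keeps the same exception wording, which is not guaranteed across versions.

```java
import java.util.zip.ZipException;

// Hypothetical helper class, for illustration only.
class Zip64BugDetector {
    // Message prefix produced when the misread uncompressed size is zero.
    static final String PREFIX = "invalid entry size (expected 0 ";

    /** Returns true if the exception message matches the misread-descriptor pattern. */
    static boolean looksLikeZip64DescriptorBug(ZipException e) {
        String message = e.getMessage();   // may be null for exceptions built without a message
        return message != null && message.startsWith(PREFIX);
    }

    public static void main(String[] args) {
        ZipException bug = new ZipException(
                "invalid entry size (expected 0 but got 199 bytes)");
        ZipException other = new ZipException(
                "invalid entry size (expected 10 but got 199 bytes)");
        System.out.println(looksLikeZip64DescriptorBug(bug));
        System.out.println(looksLikeZip64DescriptorBug(other));
    }
}
```

Matching on message text is brittle, so a wrapper that depends on it is best limited to runtimes where the wording has been verified.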

Finally, all of this code is wrapped into the read() methods of a ZipInputStream subclass to automatically execute the hotfix whenever the appropriate exception is encountered. I added an additional reflective check for CRC correctness since the stream throws an exception before the usual check occurs. The complete code is available on GitHub:

https://github.com/cjgriscom/ZipInputStreamPatch64

Conclusion

This patch is not ideal for all applications; the particular reflective access used here is likely not compatible with all JVMs. This solution works fine for applications with a known static runtime (like mine, where I’m stuck on OpenJDK 8 forever), but an alternative approach may be preferable, such as a third-party ZIP library, or directly modifying ZipInputStream (if licensing permits).

This patch has been tested and works on JDK 1.8.0_341 and 11.0.17. It does not work as-is on OpenJDK 19 or GraalVM.